WASHINGTON – While cyborg soldiers and fully automated weapons have long been fodder for futuristic sci-fi thrillers, they are now a reality and, if the Pentagon gets its way, will soon become the norm in the U.S. military. As Defense One reported last Thursday, the Army had just concluded a live-fire exercise using a remote-controlled ground combat vehicle complete with a fully automated machine gun. The demonstration marked the first time that the Army has used a ground robot providing fire in tandem with human troops in a military exercise and, as Defense One noted, “it won’t be the last.”

Indeed, last week’s exercise represents just the latest step in the Pentagon’s relatively quiet push toward converting the U.S. Armed Forces into a machine-majority force. Faced with low recruitment and an increased demand for soldiers, the Department of Defense is seeking to sidestep that problem altogether while also increasing the military’s firepower and force in combat.

Though unmanned aerial vehicles (UAVs), better known as drones, are the best known of these devices, the Pentagon has been investing heavily, for decades, in a cadre of military robots aimed at dominating air, sea and land. By 2010, the Pentagon had already invested $4 billion in research programs into “autonomous systems” and, since then, its research wing, the Defense Advanced Research Projects Agency (DARPA), has been spending much of its roughly $3 billion annual budget funding robotic research intended for military applications.

According to former U.K. intelligence officer John Bassett, not only will DARPA’s investments in robotics and automated weapons quickly become the norm in the U.S. military, they will soon replace humans, who are set to become a minority in the U.S. military within a matter of years. During a recent speech, Bassett, a 20-year veteran of the British spy agency Government Communications Headquarters (GCHQ) who is very familiar with U.S. military research, warned that U.S. attempts to “stay ahead of the curve” will result in the Pentagon deploying thousands of robot soldiers over the next few years. The upshot, according to Bassett, is that the U.S. Army will have “more combat robots than human soldiers by 2025,” just seven years from now.

The mechanization revolution in the military, however, isn’t set to stop there. According to the Army’s official Robotic and Autonomous Systems (RAS) strategy, the Army plans to have autonomous “self-aware” systems “fully integrated into the force” between 2031 and 2040, along with fully automated logistics. The strategy also states that, by that time, the Army will have a cadre of robots at its service, including “swarm robots” that will be “fully powered, self-unpacking and ready for immediate service,” along with advanced artificial intelligence designed to “increase combat effectiveness,” particularly in urban combat zones.

Now that ground robots are being tested alongside human soldiers, the Army’s vision and Bassett’s prediction are set to become the new reality of the U.S. military.

Robots that are smarter, faster, stronger and, of course, don’t die

Ground robots like those used in the recent Army exercise are hardly the only type of robot soldier soon to be at the Pentagon’s disposal. Weapons manufacturers have been all too eager to meet the Pentagon’s growing demand for automated war machines and have already developed a variety of such devices, to the delight of senior military officials.

In 2015, U.S. weapons giant Lockheed Martin began working with the military to develop automated networks that manage complex missions involving both unmanned aerial and ground vehicles, an effort still underway. That same year, the Navy began deploying underwater drones. In 2016, Boeing, one of the largest defense contractors in the U.S., launched an unmanned submarine designed for exploratory missions as well as combat. DARPA followed by unveiling an autonomous drone ship designed to hunt enemy submarines, which the U.S. Navy is set to begin using later this year.

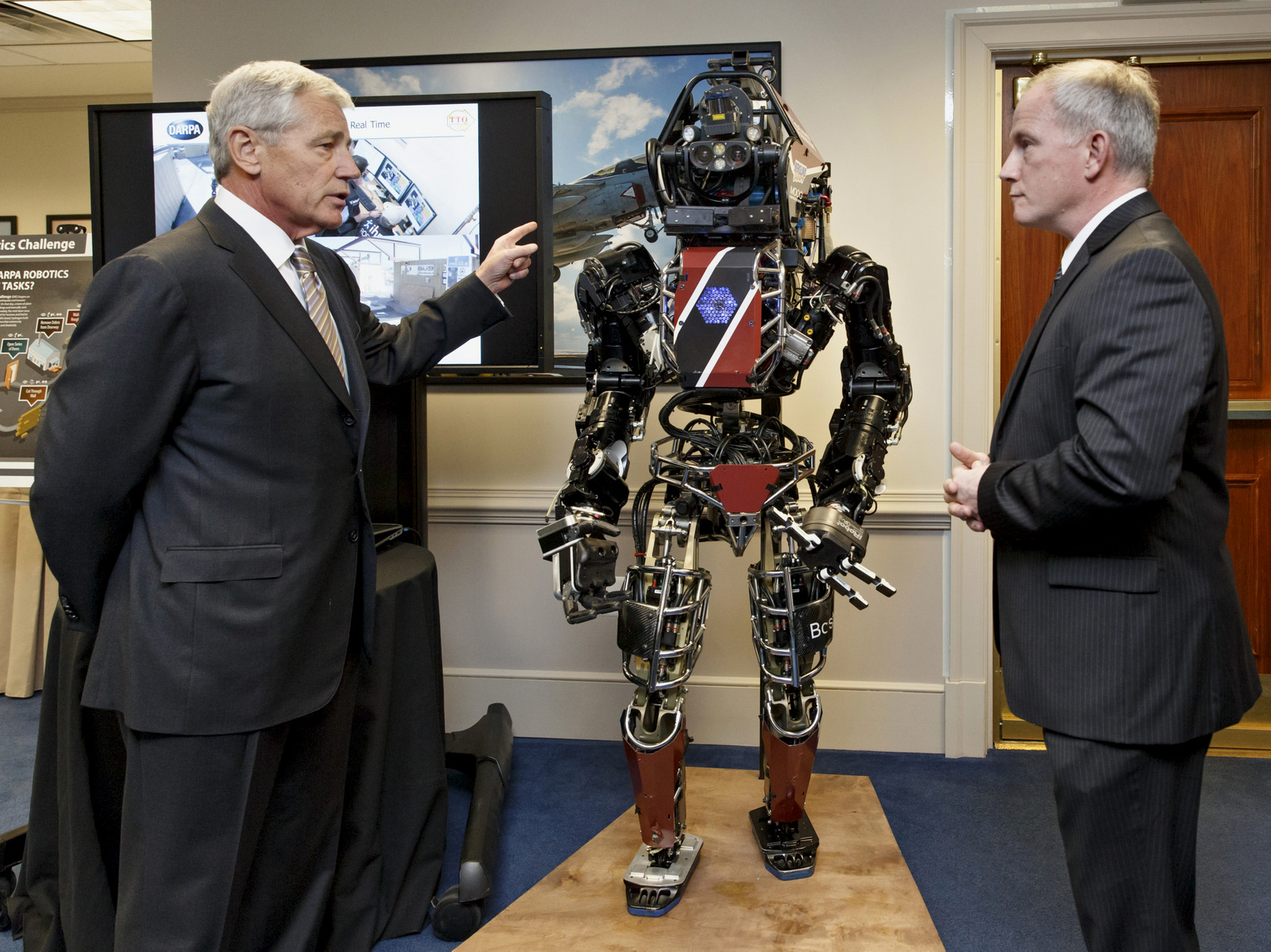

While such machines have been advertised as combat aids to human soldiers, DARPA has also been developing so-called “killer robots,” i.e., robot infantry meant to replace human soldiers. Many of these robots have been developed by the Massachusetts-based, DARPA-funded company Boston Dynamics, whose veritable Sears catalog of robots includes several models designed specifically for military use.

One of those robots, dubbed “Atlas,” is capable of jumping, performing backflips, carrying heavy loads, navigating uneven terrain, resisting attacks from a group of humans and even breaking through walls. Another Boston Dynamics robot, called “WildCat,” can run at sustained speeds of nearly 20 miles per hour. By comparison, a gifted human runner can briefly sprint at about 16 miles per hour.

Of course, the Pentagon’s interest in robotic warfare systems is not limited to machines alone. As The Atlantic reported in 2015, DARPA has been focused on “transforming humans for war” since 1990, combining man and machine to create a “super soldier.” In 2001, DARPA made its first major advance in this area when it unveiled two exoskeleton programs. By 2013, this work had evolved into a “super soldier” suit known as TALOS (Tactical Assault Light Operator Suit), which comes equipped with imaging systems, heating and cooling, an oxygen tank, embedded sensors and more. It is expected to be fully functional later this year.

Most of DARPA’s other “super soldier” programs remain secret, however. Of those that are acknowledged, the most disconcerting is the “Brain-Machine Interface,” a project first made public by DARPA at a 2002 technology conference. Though its goal was to create “a wireless brain modem for a freely moving rat,” which would allow the animal’s movements to be remotely controlled, DARPA wasn’t shy about the eventual goal of applying such brain “enhancement” to humans in order to enable soldiers to “communicate by thought alone.”

Even this went too far for some defense scientists, who warned in a 2008 report that “remote guidance or control of a human being” could quickly backfire were an adversary to gain access to the implanted technology, and who also raised concerns about the moral dangers of such augmentation.

DARPA, for its part, seems hardly concerned with such possibilities. Michael Goldblatt, director of DARPA’s Defense Sciences Office (DSO), which oversees the “super soldier” program, told journalist Annie Jacobsen that he saw no difference between “having a chip in your brain that could help control your thoughts” and “a cochlear implant that helps the deaf hear.” When pressed about the unintended consequences of such technology, Goldblatt stated that “there are unintended consequences for everything.”

Ethical killer robots

While those “unintended consequences” may not keep DARPA higher-ups like Goldblatt awake at night, concerns about the Pentagon’s plan to embrace a mechanized future have been widespread outside the military. In an attempt to quell those concerns, the Pentagon has repeatedly insisted that humans will always remain in control of life-and-death decisions and that it is taking special care to prevent robots from carrying out unintended attacks or falling prey to hackers.

It has even enlisted experts to help build “ethics” into its robotic soldiers so that they will not violate the Geneva Conventions and will “perform more ethically than human soldiers.” This may seem a rather low bar to those aware of the Pentagon’s egregious record of human rights violations and war crimes. If the Pentagon’s use of drones is any indication, the military’s “ethical” use of automated killing machines is indeed suspect.

Previous reporting has shown that those who doubt the Pentagon’s professed concern over preventing “unethical” consequences resulting from its development of a robot army are right to do so. As journalist Nafeez Ahmed reported in 2016, official U.S. military documents reveal that humans in charge of overseeing the actions of military robots will soon be replaced by “self-aware” interconnected robots, “who” will both design and conduct operations against targets chosen by artificial-intelligence systems. Not only that, but these same documents show that by 2030 the Pentagon plans to delegate mission planning, target selection and the deployment of lethal force across air, land, and sea entirely to autonomous weapon systems based on an advanced artificial intelligence system.

If that weren’t concerning enough, the Pentagon’s AI system for threat assessment is set to be populated with massive data sets that include blogs, websites and public social media posts, such as those found on Twitter, Facebook and Instagram. This AI system will use such data to carry out predictive actions, much like the predictive-policing AI system already developed by major Pentagon contractor Palantir. The planned system that will control the Pentagon’s autonomous army will also seek to “predict human responses to our actions.” As Ahmed notes, the ultimate idea, as revealed by the Department of Defense’s own documents, is to identify potential targets (i.e., persons of interest and their social connections) in real time by using social media as “intelligence.”

What could possibly go wrong?

With a budget of over $700 billion for the coming year, a figure likely to keep growing over the course of Trump’s Pentagon-controlled presidency, the Pentagon’s dystopian vision for the future of the military is quickly becoming a question not of if but of when.

Not only does this vision paint a frightening picture for future military operations abroad, it also threatens, given the rapid militarization of law enforcement, to drastically change domestic policing. The unknowns here are beyond concerning. And the unintended consequences of manufacturing a self-policing army of self-aware killing machines, devoid of human emotion, experience or conscience, could quickly become devastating. Worse still, like genies let out of their bottles, such consequences are not easily undone.

Top Photo | A graphic from the U.S. Army’s official Robotic and Autonomous Systems (RAS) strategy.

Whitney Webb is a staff writer for MintPress News who has written for several news organizations in both English and Spanish; her stories have been featured on ZeroHedge, the Anti-Media, and 21st Century Wire among others. She currently lives in Southern Chile.